Hundreds Of People Are Now Making Fake Celebrity Porn Thanks To A New AI-Assisted Tool

A new type of invasive porn is spreading on the internet. Thanks to a controversial technology, celebrities like Daisy Ridley, Natalie Dormer, and Taylor Swift have become unwitting porn stars, their faces realistically rendered into lewd positions and sex scenes. You could be next.

“The Rise of Fake Celebrity Porn the Internet Can’t Handle”

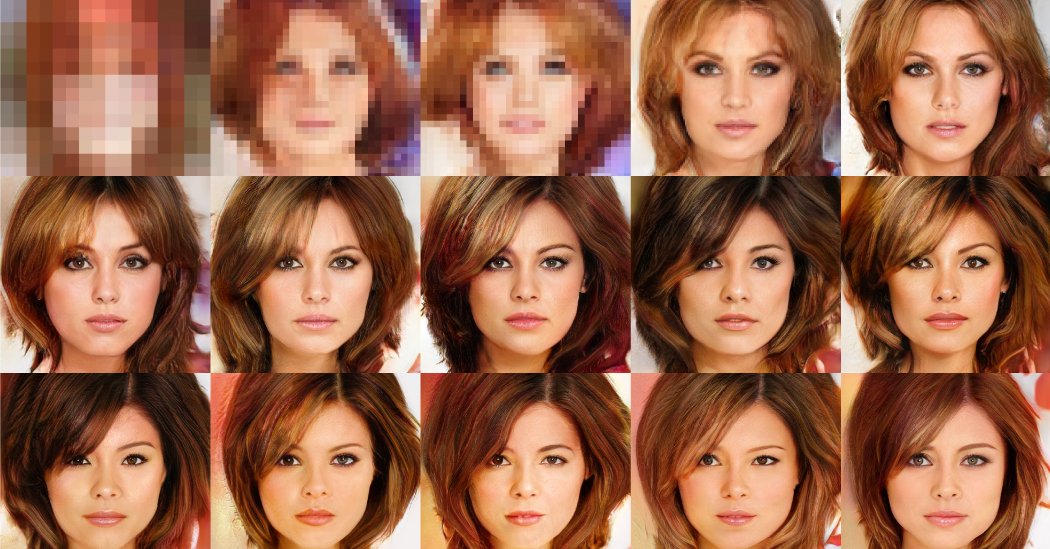

Deepfakes, named after a Reddit user who started the trend, pair GIFs and videos with machine learning to convincingly paste one person’s face onto another person’s body. Anyone with a sufficiently powerful graphics processor and a few hours to kill (or a whole day, for better quality) can make a deepfake porn video. An app built for the purpose has already reached 100,000 downloads, and there’s a growing audience for fake celebrity porn on Reddit and 4chan.

That user relied on a machine learning algorithm, his home computer, and armloads of images of both faces involved. After a few hours of training, the artificial intelligence can lay the replacement face over the original in moving video, producing a fairly convincing fake porn video. Now a hundred others are doing the same.

Another redditor has since created an app, using the same machine learning algorithm, to create AI-assisted porn videos. With its easier user interface, there are now many others on the platform using it to build their own pornographic creations. In fact, there’s an entire subreddit called deepfakes that’s dedicated to these attempts, both successes and failures, and it has close to 30,000 subscribers. You can find faked porn videos of Gal Gadot, Maisie Williams, Katy Perry, and Taylor Swift, some of them incredibly convincing. All you need is the app, photos or video of the celebrity of your choice, and a porn video with a similar-looking performer.

The future of fake porn we predicted is hurtling towards us at blinding speed, and the problem is that no country in the world has the laws to deal with this kind of thing. Is it sexual harassment, or merely defamation? More importantly, how will it affect celebrities and even regular people when this technology progresses to the point where we can’t tell the difference?

To be sure, there are a lot of failed attempts on the subreddit, but some manage to come uncomfortably close to believable. For instance, redditor DaFakir decided to try his hand at faking Katy Perry porn.

The ability to turn anyone into a porn star, as long as you have enough high-quality images of their face, raises some serious ethical and practical questions. It’s hard to say whether deepfakes are legal, considering they touch unsettled areas of intellectual property law, privacy law, and brand-new revenge porn statutes that vary from state to state. Regardless, who’s willing to host this stuff? And is it possible to stop it?

Reddit’s r/deepfakes community has received the most attention thus far, thanks to the initial report by Motherboard on Jan. 24 and other press coverage, but posters there are also conflicted about the NSFW nature of their work. The outside attention has been mostly critical, calling out deepfakes as a heinous privacy violation and the beginning of a slippery slope toward fake porn of non-famous people. Revenge porn is already ruining lives and tying the legal system in knots, and porn-making neural networks could severely compound the issue.

Some on r/deepfakes have proposed splitting into two or more subreddits to create a division between those who want to advance facial recognition as a consumer technology and those who just want to jerk off to fake videos of their favorite Game of Thrones actresses.

“This should be someplace that you can show your classroom, or parents, or friends, for a tangible example of what machine learning is capable of, but instead it’s just a particularly creepy kind of porn,” wrote one poster.

“And I say that as someone who has had a kink for fake celebrity porn since 2001,” he added.

Pornhub says it is working to take down the AI-made videos.

These rare flashes of conscience are the reason that anonymous posters on 4chan have argued that Reddit shouldn’t be the home of deepfakes. They feel it’s too liberal—too feminist and “social justice warrior,” as the troll parlance goes—to reliably keep the porn coming.

“There needs to be a proper website to host this stuff regardless, reddit is full of sjws,” wrote one anonymous user.

Some of the earliest deepfake porn posts have already been removed from Reddit, forcing frantic users to break out their backup copies. (There are always backup copies.)

Complicating matters even further, the fakes are mostly hosted outside of Reddit itself. They were initially being uploaded to popular GIF-hosting site Gfycat, but the site took swift action to remove them. “Our terms of service allow us to remove content we find objectionable,” Gfycat told the Daily Dot via email. “We find this content objectionable and are actively removing it from our platform.” Gfycat doesn’t need to make that call, though: deepfakes violate the site’s terms of service, and they’re being taken down.

Deepfake posters then took to Pornhub, which is one of the largest streaming porn providers online and also allows community uploads. One Reddit poster, who claims to be based in Ukraine, uploaded 27 deepfake porn videos, including some that had been deleted from Reddit.

But while Pornhub has a low tolerance for “nonconsensual” porn like these celebrity deepfakes, it’s also a game of Whack-A-Mole, with new videos continuing to crop up.

“Regarding deepfakes, users have started to flag content like this and we are taking it down as soon as we encounter the flags,” a Pornhub spokesperson told the Daily Dot. “We encourage anyone who encounters this issue to visit our content removal page so they can officially make a request.”

Sources:

Motherboard, E-writeup, Daily Dot

Reddit user “deepfakeapp” told Motherboard:

“I think the current version of the app is a good start, but I hope to streamline it even more in the coming days and weeks. Eventually, I want to improve it to the point where prospective users can simply select a video on their computer, download a neural network correlated to a certain face from a publicly available library, and swap the video with a different face with the press of one button.”